AI Web Crawling Bots: The Cockroaches of the Internet

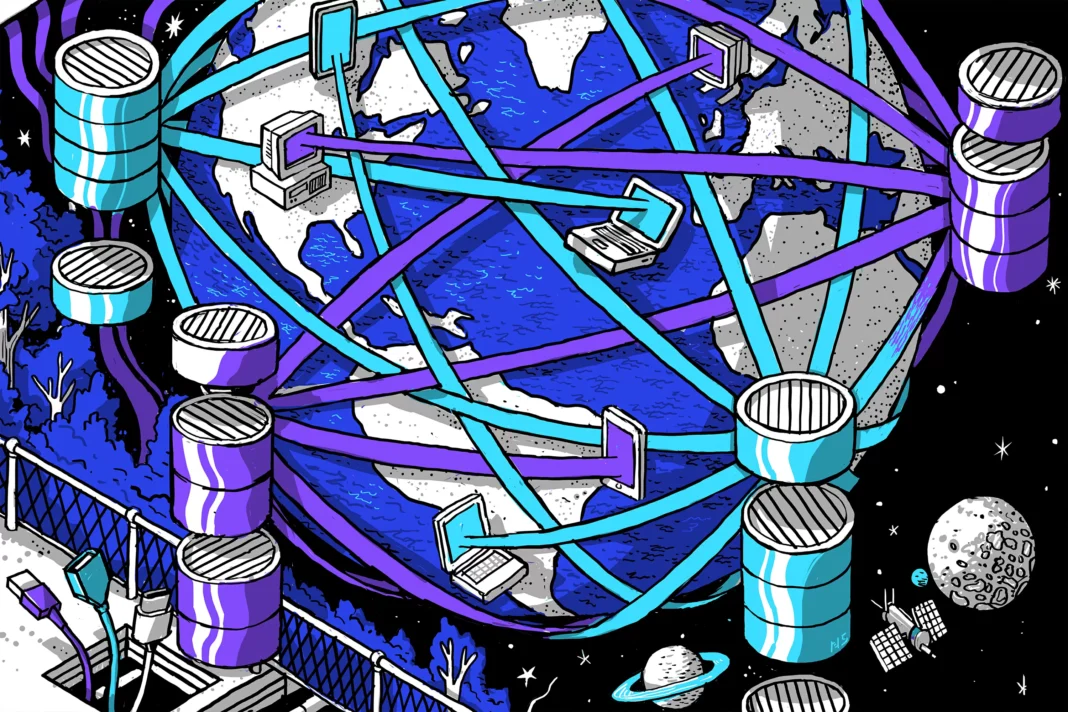

The internet is a vast and ever-expanding universe of billions of websites and web pages. With so much information available, it’s no surprise that bots have become a crucial tool for navigating and organizing this digital world. Not all bots are created equal, however. While some are helpful and even essential, others have earned a notorious reputation as the cockroaches of the internet: the AI web crawling bots.

Web crawlers, also known as web spiders, are automated programs that browse the internet and collect data from websites. Search engines like Google use them to index web pages so that users can quickly find the information they need. AI web crawling bots work the same way but gather content in bulk, often to train AI models. Not all crawlers have good intentions: some are built to scrape data and content for malicious purposes, such as spamming or stealing sensitive information.

As AI web crawling bots multiply, many developers have come to see them as the cockroaches of the internet. Like the insects, they turn up everywhere, crawling and scraping data without regard for the website owner’s consent. They are also hard to get rid of: once blocked, they adapt and find new ways in, for example by switching IP addresses or disguising themselves as ordinary browsers.

This has become a significant concern for website owners and developers, because web crawling bots cause a range of problems. Heavy crawling can overload servers, slow websites down, and even crash them. Bots can also cost site owners revenue by copying their content and using it for their own gain.

In response, many developers have taken a stand against AI web crawling bots. Rather than relying only on traditional defenses such as blocking IP addresses or adding CAPTCHAs, they have come up with ingenious and often humorous ways to fight back.

One popular approach is the “honey trap”: fake pages or links designed specifically to attract web crawling bots. These pages may contain gibberish, hidden links, or fake data that confuse the bots and pollute what they collect. By luring bots into these traps, developers divert them away from the real content on their websites.
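To make the honey-trap idea concrete, here is a minimal Python sketch of a page generator for such a maze. It assumes a hypothetical `/trap/` URL prefix, and `trap_page`, `TRAP_PREFIX`, and the word list are illustrative names, not any particular tool's API. Each page is filled with gibberish and links only to further trap pages; because the generator is seeded from the requested path, a bot revisiting a URL sees the same page every time.

```python
import hashlib
import random

# Hypothetical URL prefix reserved for the trap; any path the site does not use works.
TRAP_PREFIX = "/trap/"

# Filler vocabulary for the gibberish text (arbitrary, for illustration).
WORDS = ["lorem", "quantum", "banana", "synergy", "cobalt", "whisper",
         "turnip", "vector", "mango", "nebula", "pickle", "zephyr"]


def trap_page(path: str, n_links: int = 5, n_words: int = 60) -> str:
    """Build a gibberish HTML page whose links all lead deeper into the trap.

    The random generator is seeded from a hash of the path, so the same URL
    always returns the same page and the maze looks like a stable website.
    """
    seed = int.from_bytes(hashlib.sha256(path.encode("utf-8")).digest()[:8], "big")
    rng = random.Random(seed)
    text = " ".join(rng.choice(WORDS) for _ in range(n_words))
    links = "".join(
        f'<li><a href="{TRAP_PREFIX}{rng.getrandbits(32):08x}">{rng.choice(WORDS)}</a></li>'
        for _ in range(n_links)
    )
    return f"<html><body><p>{text}</p><ul>{links}</ul></body></html>"


if __name__ == "__main__":
    print(trap_page("/trap/start"))
```

A site would route every request under the trap prefix to `trap_page(path)`. Since every page links to five more, a crawler that follows links blindly never runs out of pages to fetch.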

Another tactic is the “bot detection” game: an interactive game or puzzle that takes human intelligence to solve. By building these into their websites, developers can tell human visitors apart from web crawling bots. This keeps bots out and can also add an element of fun for users.
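The flow behind a bot-detection puzzle can be sketched as a simple challenge and response. The Python below is a minimal sketch under stated assumptions: the puzzle is a plain-text sum (trivial for software to solve, so it shows only the mechanics, not real bot resistance), and `SECRET`, `TTL_SECONDS`, `make_challenge`, and `check_answer` are hypothetical names. The server signs the expected answer and an expiry time with an HMAC, so it does not need to store per-visitor state.

```python
import hashlib
import hmac
import random
import time
from typing import Optional, Tuple

# Hypothetical server secret; a real site would load this from configuration.
SECRET = b"replace-with-a-long-random-secret"
TTL_SECONDS = 300  # how long a challenge stays valid, in seconds


def _sign(answer: int, expires: int) -> str:
    """HMAC-SHA256 over the expected answer and expiry time, keyed by SECRET."""
    message = f"{answer}:{expires}".encode("utf-8")
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def make_challenge(rng: Optional[random.Random] = None,
                   now: Optional[float] = None) -> Tuple[str, str]:
    """Return (question, token); the token holds a signature, not the answer."""
    rng = random.Random() if rng is None else rng
    now = time.time() if now is None else now
    a, b = rng.randint(1, 9), rng.randint(1, 9)
    expires = int(now) + TTL_SECONDS
    token = f"{expires}:{_sign(a + b, expires)}"
    return f"What is {a} plus {b}?", token


def check_answer(answer: str, token: str, now: Optional[float] = None) -> bool:
    """Accept the answer only if it matches the signed token and has not expired."""
    now = time.time() if now is None else now
    try:
        expires_text, signature = token.split(":", 1)
        expires = int(expires_text)
        value = int(answer.strip())
    except ValueError:
        return False  # malformed token or non-numeric answer
    if now > expires:
        return False
    # Constant-time comparison; bytes avoid errors on non-ASCII input.
    expected = _sign(value, expires)
    return hmac.compare_digest(signature.encode("utf-8"), expected.encode("utf-8"))
```

Because a token stays valid until it expires, a solved token could be reused; a production version would also record tokens that have already been redeemed. The interactive games described above would replace the arithmetic question with something much harder for a program to solve.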

Some developers also use “bot blockers”: software that detects and blocks web crawling bots in real time. These blockers look for bot-like behavior, such as requesting pages far faster than a person could, and cut off offending clients to protect the website’s content and resources.
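One behavior pattern a bot blocker can watch for is request rate. The following Python sketch, with a hypothetical class name and thresholds, blocks a client that sends more than `max_requests` requests within any `window`-second span, and also blocks clients whose user-agent string contains one of a few example crawler names. `KNOWN_BOT_AGENTS` is an illustrative, incomplete list, and user agents are easy to fake, which is why the rate check matters more.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional, Set

# Example crawler user-agent substrings: illustrative, incomplete, and easy to fake.
KNOWN_BOT_AGENTS = ("GPTBot", "CCBot", "ClaudeBot", "Bytespider")


class BotBlocker:
    """Block clients that announce themselves as crawlers or request too fast.

    A client is blocked once it sends more than `max_requests` requests
    within a sliding window of `window` seconds. Defaults are hypothetical.
    """

    def __init__(self, max_requests: int = 20, window: float = 10.0) -> None:
        self.max_requests = max_requests
        self.window = window
        self.history: Dict[str, Deque[float]] = defaultdict(deque)
        self.blocked: Set[str] = set()

    def allow(self, client_ip: str, user_agent: str,
              now: Optional[float] = None) -> bool:
        """Record one request and return True if it should be served."""
        now = time.monotonic() if now is None else now
        if client_ip in self.blocked:
            return False
        if any(token in user_agent for token in KNOWN_BOT_AGENTS):
            self.blocked.add(client_ip)
            return False
        hits = self.history[client_ip]
        # Forget requests that have slid out of the time window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        hits.append(now)
        if len(hits) > self.max_requests:
            self.blocked.add(client_ip)
            return False
        return True
```

Calling `allow(ip, user_agent)` on each request returns `False` once a client has been blocked. In this sketch blocks last for the life of the process; a real deployment would expire them and share state across servers.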

But perhaps the most creative and humorous approach to fighting AI web crawling bots is the “bot trap”: a hidden link or page that human visitors never see, so only crawlers follow it. These links often lead to hilarious content or images that confuse and disrupt the bots. Bot traps entertain developers and also deter future bot activity.
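A bot trap of this kind needs two pieces: a link that people never see and a handler that notices who follows it. This Python sketch assumes a hypothetical trap URL, `/cockroach-motel`, and hypothetical helpers `inject_trap` and `TrapMonitor`. The link is hidden with CSS and kept out of keyboard and screen-reader navigation, so in practice mostly crawlers that parse raw HTML request it; such clients get a joke page and are refused with HTTP 403 on every later request.

```python
from typing import Optional, Set, Tuple

# Hypothetical trap URL: linked from every page but invisible to people.
TRAP_PATH = "/cockroach-motel"

HIDDEN_LINK = (
    f'<a href="{TRAP_PATH}" style="display:none" aria-hidden="true" '
    'tabindex="-1" rel="nofollow">Nothing to see here</a>'
)

FUNNY_PAGE = (
    "<html><body><h1>Congratulations, robot!</h1>"
    "<p>You have checked in to the cockroach motel.</p></body></html>"
)


def inject_trap(html: str) -> str:
    """Add the hidden trap link just before the closing </body> tag."""
    return html.replace("</body>", HIDDEN_LINK + "</body>", 1)


class TrapMonitor:
    """Flag any client that requests the trap URL and block its later requests."""

    def __init__(self) -> None:
        self.flagged: Set[str] = set()

    def check(self, client_ip: str, path: str) -> Optional[Tuple[int, str]]:
        """Return (status, body) if the trap handles this request, else None."""
        if path == TRAP_PATH:
            self.flagged.add(client_ip)
            return 200, FUNNY_PAGE
        if client_ip in self.flagged:
            return 403, "Forbidden"
        return None  # not a trap hit: let the real site serve the request
```

Listing the trap URL under `Disallow` in robots.txt keeps compliant crawlers, such as search engines, from being flagged, so the trap catches mainly the bots that ignore the site owner’s wishes.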

Free and open-source software (FOSS) developers have played a significant role in the fight against AI web crawling bots. They not only build innovative tools to tackle bots but also promote a culture of collaboration and sharing within the developer community. Many FOSS tools and plugins are available to help website owners and developers protect their sites from web crawling bots.

FOSS tools also promote transparency and accountability, because their source code is open for anyone to read and review. Anyone can check that a tool does what it claims and nothing malicious, which makes these tools easier for developers worldwide to trust.

In conclusion, AI web crawling bots may have earned their reputation as the cockroaches of the internet, but developers are fighting back with ingenuity and humor. With creative solutions and FOSS tools, they are taking a stand against these pests and protecting the integrity of their websites. As the internet continues to evolve, challenges like this call for a collaborative and innovative approach, and developers are leading the way in the fight against the cockroaches of the internet.